Field App

FIELD APP CONCEPTUALISATION AND DESIGN

The main project I worked on during my time at SUSLIB was conceptualising and then designing the UI and UX for the flagship Field App. The app was a personalized cultural discovery platform that used AR to bridge physical and digital collections. I worked closely with co-founder and head of design Martijn de Heer, and together we created the main mobile interface, which replicated the feeling of entering one’s own research space, with SUSLIB’s software acting as a research assistant. The user could serendipitously stumble on loosely related content which, through indirect and non-obvious connections to her own interests, could spark new ideas. The pieces were just lying around, barely organized, yet each was supported by the connections that users who had interacted with similar content had made to it. The system was reminiscent of the one at the Sitterwerk library [URL].

The app featured an individually customized dashboard that assembled each user's intellectual essence through recommendations, saved excerpts, and contextual connections. Within the app, the user could find books, articles, podcast or movie excerpts, and exhibition and cultural event recommendations, all tailored to the user's specific interests and earlier indications of interest.

One of the key features was the AR annotation system, which allowed seamless note-taking across physical books using computer vision and page recognition. Users could point their camera at any book page; Field automatically segmented it into paragraphs and let them add multimedia annotations: text, links, or images. These annotations were stored in the system and could be shared with other users, creating collective layers of knowledge over physical objects.

Later, the annotations could be found in the app. For every annotated book we added a navigation stripe at the top so that, as with a physically marked book, the user could see at a glance which parts of the book were most heavily annotated and have an easy way of “scrolling through them”.

We wanted to allow users to annotate the media not only with text but also with images, and to make the process as smooth as possible. Thanks to our object recognition software, users could point their camera at an object and, instead of attaching the whole picture, have the app automatically create a cut-out of the specific object they selected.

Another function driven by object recognition let the user point at an object and get recommendations of materials that could contextualise it. Additionally, the user could swipe between two objects within the camera field and explore the relationship between them through added recommendations.

SOFTWARE TRAINING AND OBJECT RECOGNITION

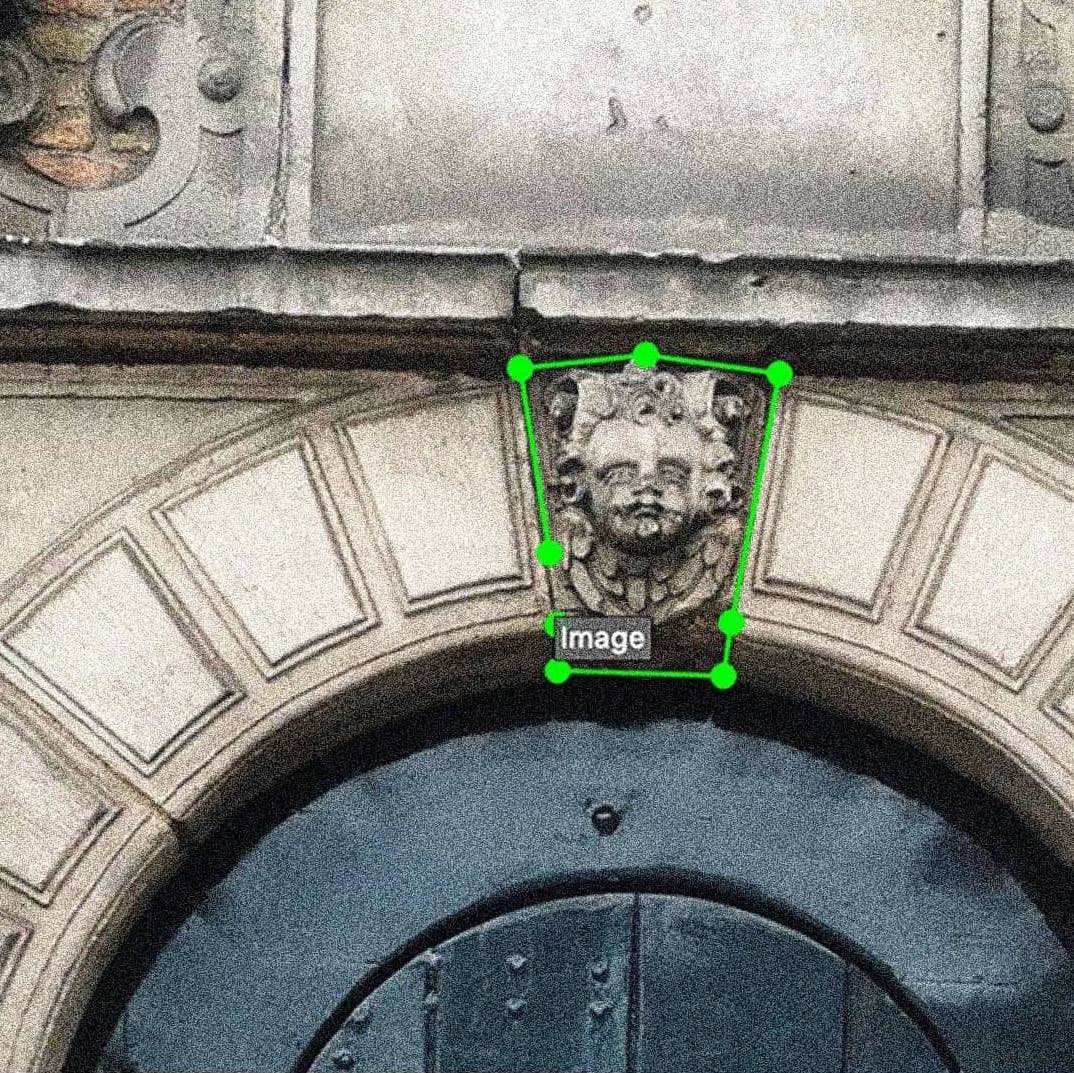

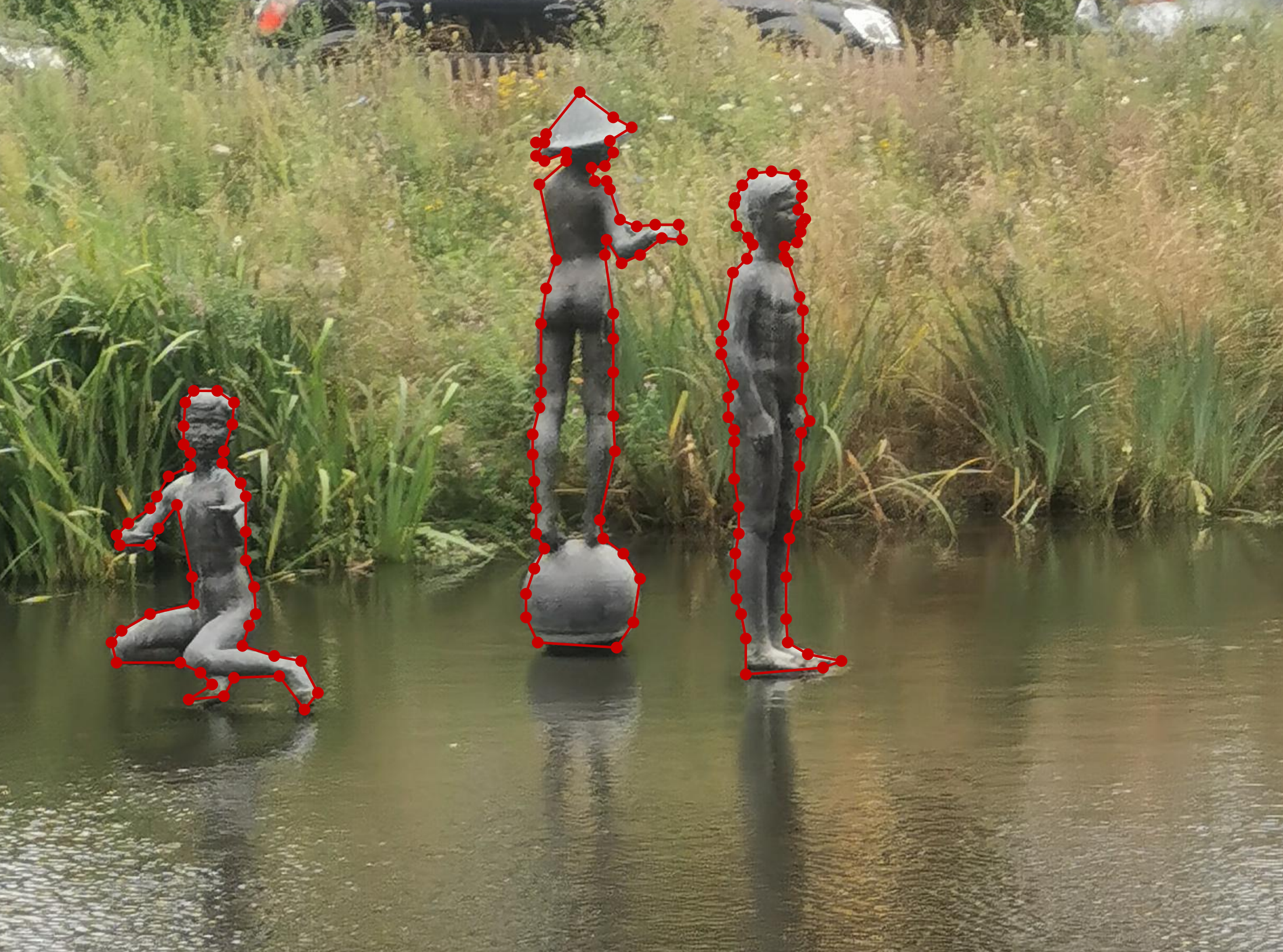

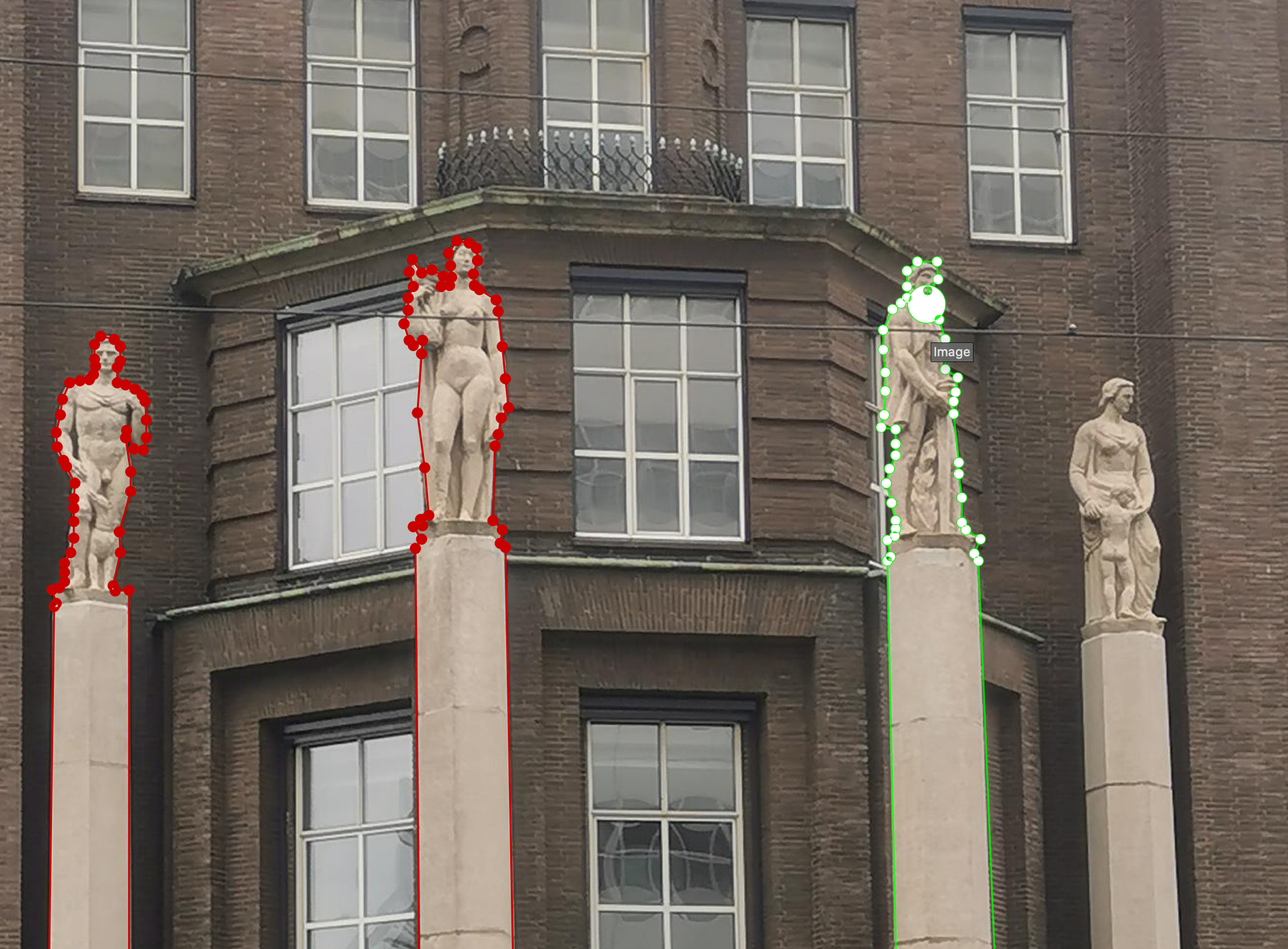

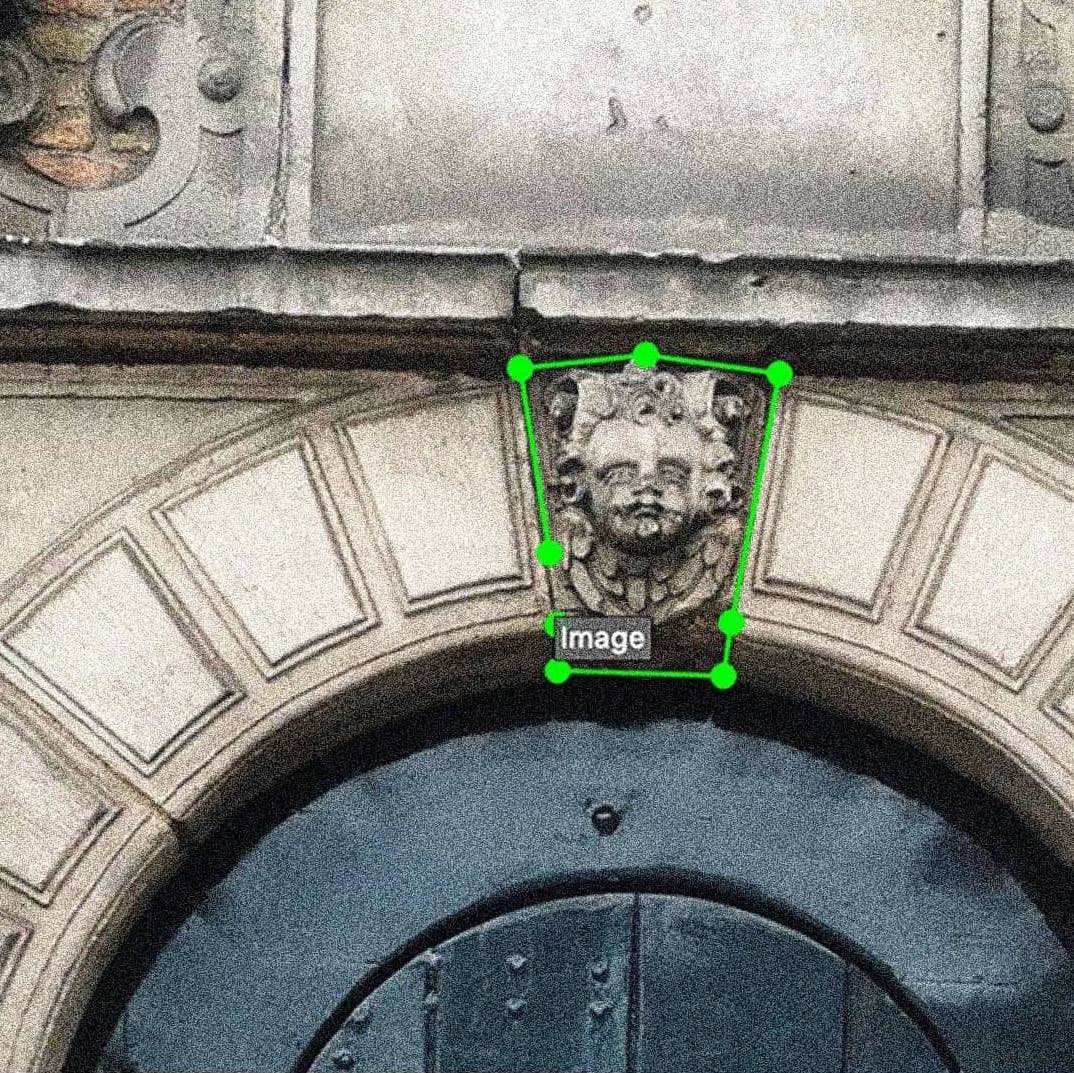

SUSLIB’s software relied heavily on AR-based interaction and object recognition. To customise the object recognition functionality to our needs and have full control over both input and output, we trained our own recognition models.

We were interested in creating context-specific models, so one of our models was trained on data gathered in The Hague, where our studio was located. We photographed all the sculptures we could find in the city and then segmented the images. Later on, the dataset and the trained model could be used for context-specific object recognition and for finding connections within the inherently limited environment of The Hague.

PUBLIC SPACES AND INTENTION RECOGNITION

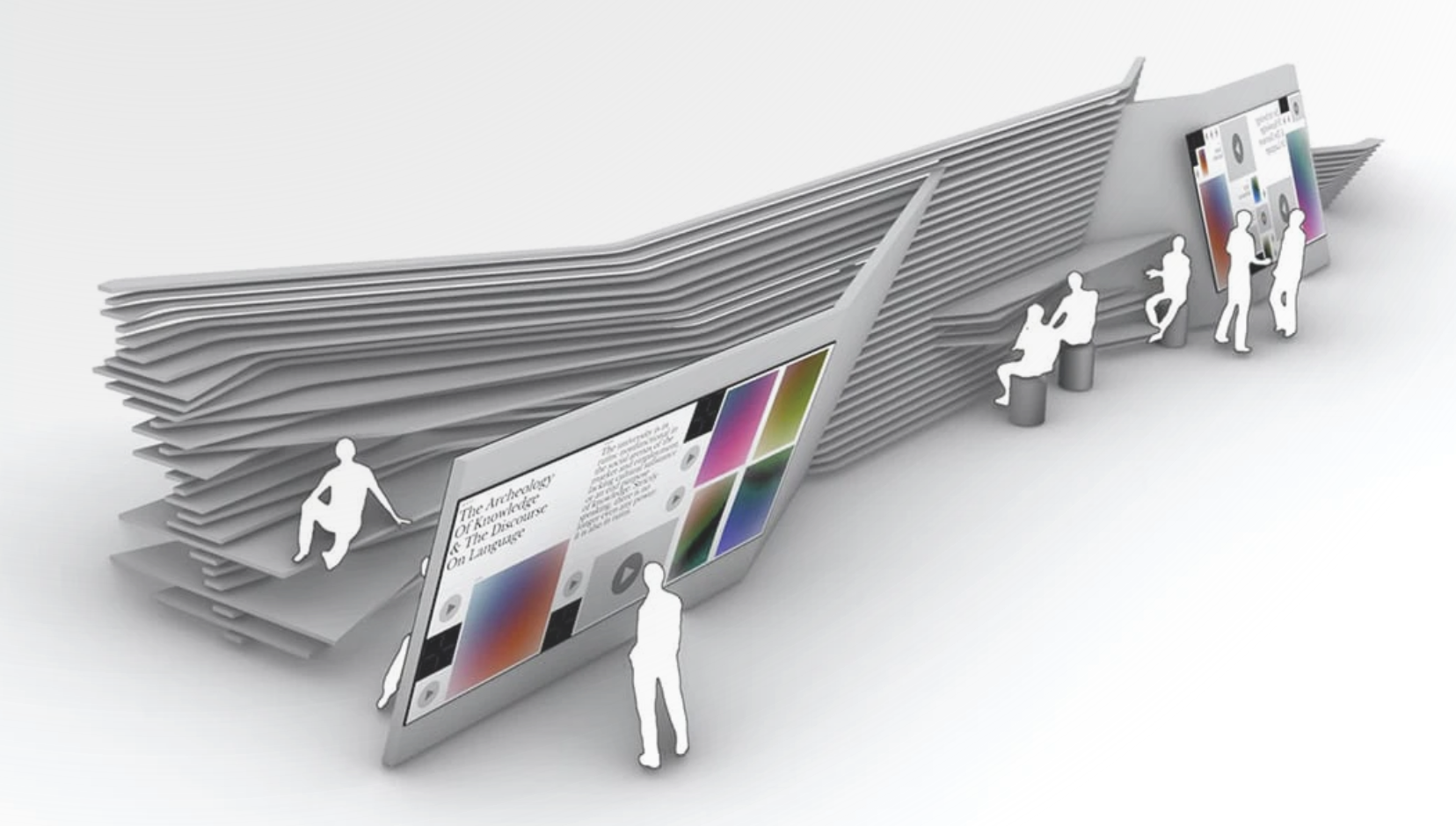

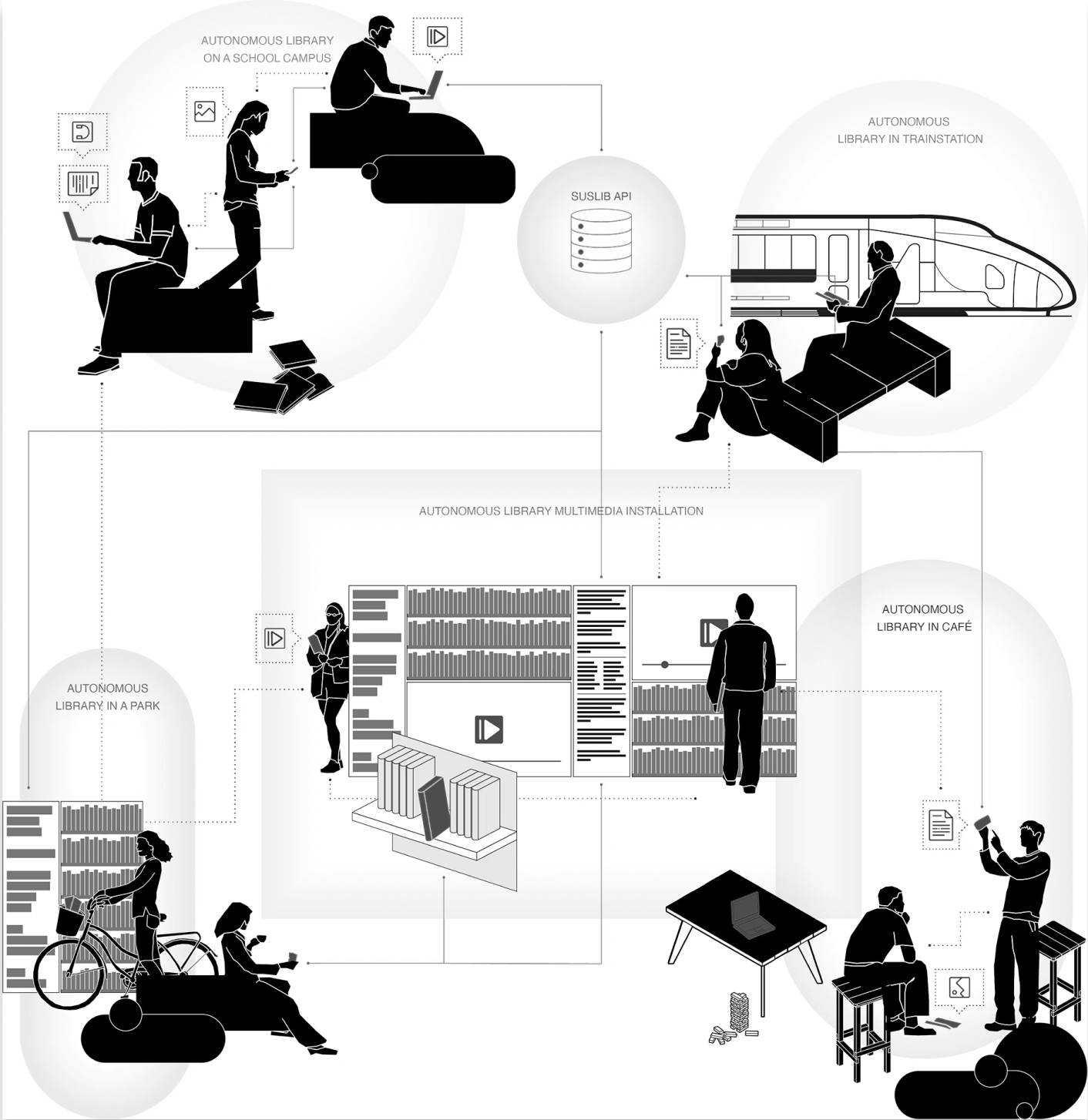

The largest-scale project at SUSLIB was creating “autonomous libraries”: public knowledge spaces that would push resources mostly confined to libraries and archives out into the public. The spaces would encourage a more meaningful use of time in liminal spaces such as stations or airports, and would also provide spatial solutions and software for campuses, cafes and even parks.

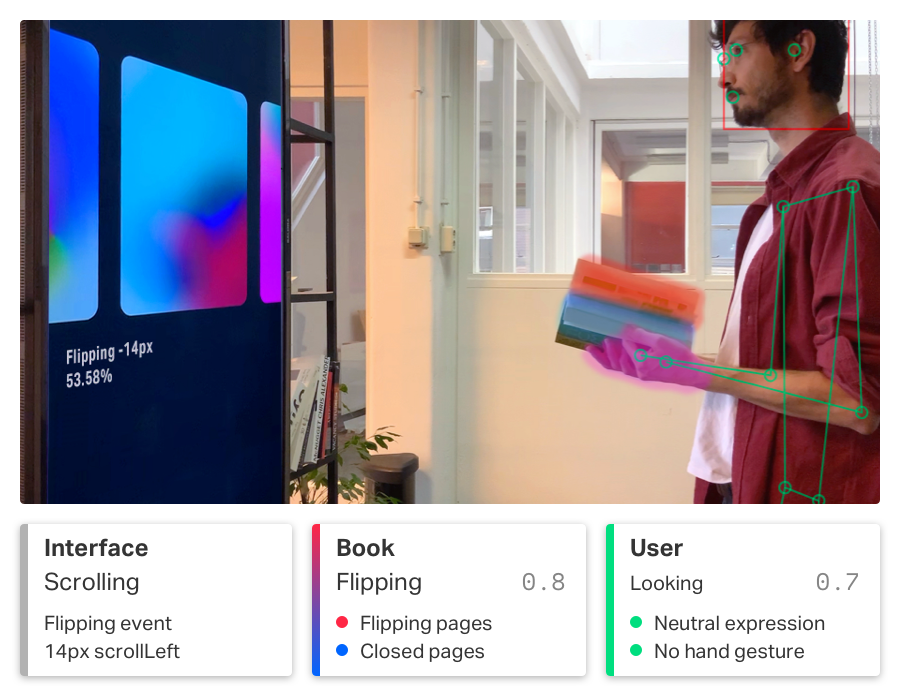

SUSLIB was mostly active in the midst of the COVID-19 pandemic, so creating public spaces that eliminated the need for hardware (such as mice and keyboards) and instead relied on touchless interaction was a very topical area of exploration. As with object recognition, we created our own software for “intention recognition” or, put simply, gesture recognition. I researched gesture-based interactions in existing AR technologies and analyzed sci-fi media to understand intuitive movement patterns. Through user testing, we identified the most natural gestures for functions like scrolling, selection, and navigation.

One unique interaction I developed was "book flipping" - the system could recognize when users physically flipped through books and display corresponding digital information based on the detected page position. This bridged physical and digital interaction in an entirely new way.

The SUSLIB products attracted significant interest from major institutions such as OBA (Amsterdam Public Library) and received funding from Stimuleringsfonds, which supports innovative contributions to art and culture in the Netherlands. While SUSLIB eventually ceased development in 2022, its innovative approaches to AR-enhanced knowledge discovery and touchless interaction continue to inform my understanding of designing for emerging technologies in cultural spaces.

OTHER PROJECTS ↓

Strefy Czasowe

Full branding and art direction for a festival in the night of time change

ARIAS

Brand new look for research through arts & sciences in Amsterdam

Eye of Jeronimo

Branding for an independent kaleidoscope producer

Basketology

A philosophy for alternative storytelling

Lectorate: Touching

Visual campaign for a publication about research by touch

Anyone Want That?

A digital research into exchange of materials between art academy students

Building Second Brain

Moving poster for a lecture about memory outsourcing

Field App

Designing interfaces and interactions for the future of knowledge exploration